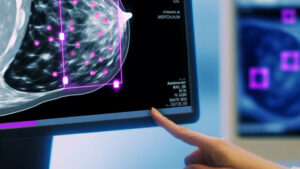

Breast Cancer Detection with Computer Vision and Image Processing

The analysis of mammographic images is a critical task that relies on experienced radiologists to identify subtle signs of malignancy, often hidden within complex tissue patterns. However, the high volume of images and the variability introduced by radiologist fatigue make it hard to maintain accuracy and consistency. To address these issues, Machine Learning (ML) object detection models were combined with image processing techniques such as CLAHE (Contrast Limited Adaptive Histogram Equalization) to enhance image contrast and automate the detection of abnormalities. This integration, supported by the collaborative efforts of UoM and UDG, has streamlined diagnostic workflows, reducing analysis time and improving early detection, a notable step forward for healthcare diagnostics. Read more at [link].
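To give a feel for what CLAHE does to an image, the sketch below applies its core step, contrast-limited histogram equalization, to a single grayscale tile. It is a simplified illustration, not the project's pipeline: full CLAHE also splits the image into a grid of tiles and interpolates between their mappings, and the function name `clip_limited_equalize`, the `clip_limit` value, and the sample tile are all assumptions made for this example.

```python
def clip_limited_equalize(tile, clip_limit=4, levels=256):
    """Equalize one grayscale tile using a clipped histogram.

    tile: list of rows of integer intensities in [0, levels).
    clip_limit: hypothetical cap on any histogram bin; counts above it
        are spread evenly over all bins to limit noise amplification.
    """
    pixels = [p for row in tile for p in row]

    # Build the intensity histogram of the tile.
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1

    # Clip each bin at clip_limit and collect the excess counts.
    excess = 0
    for i in range(levels):
        if hist[i] > clip_limit:
            excess += hist[i] - clip_limit
            hist[i] = clip_limit

    # Redistribute the excess uniformly across all bins.
    bonus = excess / levels
    hist = [h + bonus for h in hist]

    # Map each intensity through the normalized cumulative histogram.
    total = len(pixels)
    lut = []
    running = 0.0
    for h in hist:
        running += h
        lut.append(min(levels - 1, round((levels - 1) * running / total)))

    return [[lut[p] for p in row] for row in tile]


# A low-contrast tile: all intensities fall between 100 and 104.
tile = [[100, 101, 101], [102, 101, 103], [101, 101, 104]]
enhanced = clip_limited_equalize(tile, clip_limit=2)
```

After the call, the narrow intensity range of the input tile is stretched over a much wider range while the ordering of intensities is preserved. In practice a library implementation would normally be used instead, for example OpenCV's `cv2.createCLAHE(clipLimit=..., tileGridSize=...)`; the source does not state which implementation or parameters the project used.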

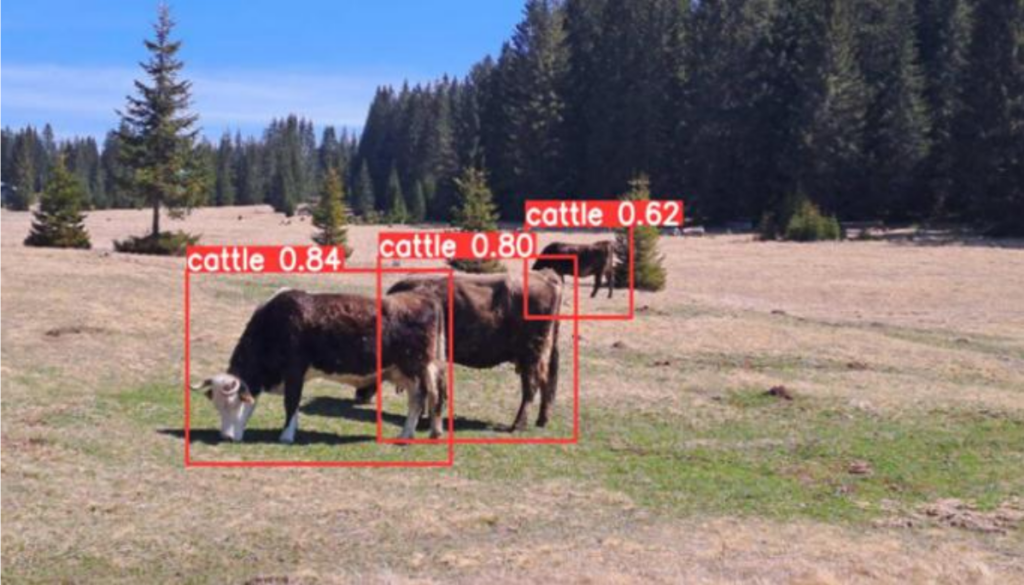

Livestock Monitoring with Edge AI and High-Performance Computing

Livestock farming is vital to many economies, including Montenegro's, but faces high labor costs, resource inefficiencies, and slow adoption of modern technology. Farmers traditionally monitor herds by manually counting and inspecting animals, a time-consuming and costly process that strains resources. On large farms with hundreds of animals, keeping an eye on each cow or sheep is daunting and prone to human error. Stray, lost, or ill animals may go unnoticed until it is too late, jeopardizing farm productivity and animal welfare. Farmers needed real-time, automated livestock monitoring to support their work. The challenge was not only to detect animals accurately, but to do so efficiently in remote farm environments, which demands substantial computing power for model development and reliable operation outside the lab. High-Performance Computing (HPC) offered a way forward, providing the computational capacity to develop advanced AI models that could meet these demands. Read more at [link].

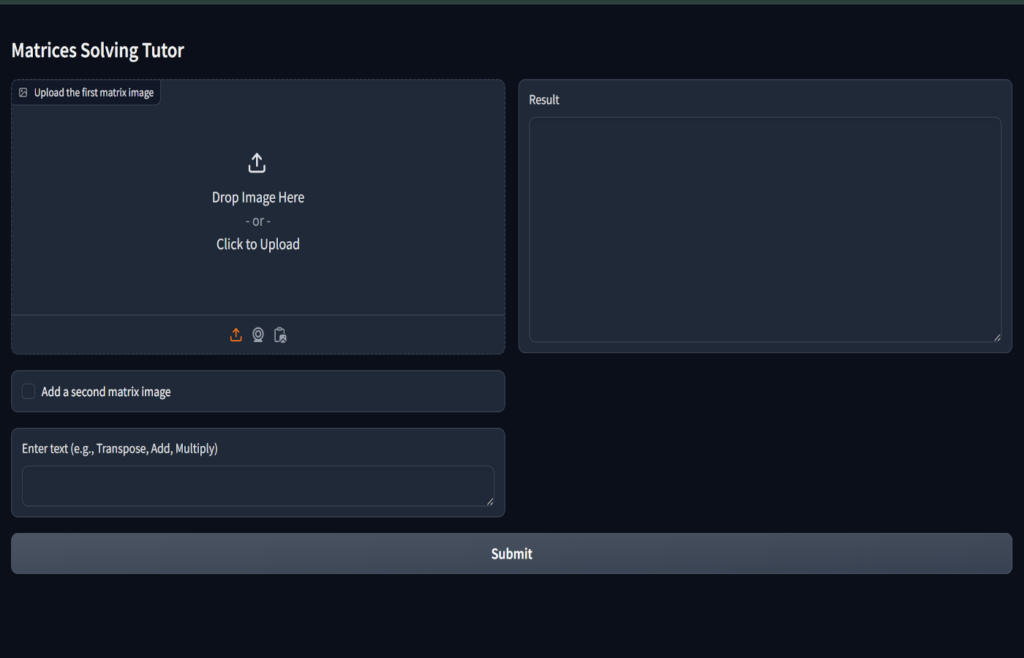

HPC-Driven Development of AI Tools for Matrix Problem Solving

Understanding matrix operations is a notorious stumbling block for many students. Despite the foundational importance of matrices in disciplines like mathematics, engineering, and computer science, learners often struggle with their abstract nature and the step-by-step complexity involved in solving matrix problems. Traditional teaching methods, such as lectures and textbooks, usually lack interactivity and timely feedback, which can leave students confused or disengaged when they hit a roadblock. In a common scenario, a student works through a matrix calculation on paper but, without immediate feedback, does not notice a mistake until much later. This absence of instant guidance makes it harder to pinpoint errors and grasp core concepts. The challenge was clear: how to give students a more interactive, supportive way to practice matrix problems, so that they can learn by doing, confident that mistakes will be caught and corrected in real time. Read more at [link].

AI-Powered Poultry Farming: Enhancing Accuracy with Synthetic Data

Modern poultry farming increasingly incorporates deep learning techniques to automate tasks such as bird detection, animal counting, behavior analysis, and mortality detection, aiming to optimize production and ensure animal welfare. While these technologies offer significant potential for improving efficiency, sustainability, and resource management, their effective implementation faces challenges. Key among these are the variable conditions in farm environments, the scarcity of data for training robust models, and the labor-intensive nature of traditional monitoring practices. To address these challenges, this study explores the use of computer vision and generative artificial intelligence (GenAI) to enhance animal detection and tracking, enabling more accurate and scalable farm management. By leveraging AI-driven solutions, farmers can gain data-driven insights and automate processes, reducing costs and improving productivity in modern poultry farming. Read more at [link].